Five Steps for Performing Due Diligence on an Acquisition Target's Use of Generative AI

07.17.23

Generative Artificial Intelligence (Generative AI), such as ChatGPT and similar predictive text artificial intelligence models, is currently a hot topic. Businesses are looking for ways to integrate it into their operations, while lawyers remain cautious about the risks involved. Whatever your view, Generative AI is becoming mainstream, and buyers of businesses need to take steps during due diligence to understand and manage the risks associated with this new technology. This article provides a starting point for a buyer’s due diligence efforts related to Generative AI, to help buyers better allocate that risk between themselves and the target, or adjust the purchase price as appropriate.

- Identify the Risks of Generative AI. The first step in any due diligence inquiry is understanding the potential risks, because a buyer cannot protect itself against risks it does not sufficiently understand. The risks associated with Generative AI are still unfolding, but any potential buyer should, at a minimum, be concerned with the following:

  - Intellectual property ownership rights. Ownership of content produced by Generative AI remains unclear. Generative AI output is ultimately derivative, because the underlying models are trained on existing works, so using that output raises the question of whether the original authors’ intellectual property rights have been infringed. Further, a Generative AI service may produce similar, if not identical, output for multiple users, which can create a legal quagmire over who owns it.

  - Disclosure of confidential information and loss of information rights. Disclosing information to a Generative AI service may jeopardize its confidentiality, for example if the service provider retains or uses that input. This creates business risk and may ultimately reduce the value of the target.

  - Improper reliance upon Generative AI. Generative AI can be a great efficiency resource, but it is not perfect and is known to produce inaccurate results. Inadequate oversight, overuse, and excessive reliance can indicate a larger business issue. A good example comes from the legal industry, where a court sanctioned a lawyer for relying on Generative AI for his legal research. The lawyer cited legal precedent in a court filing without taking the time to verify its accuracy; unfortunately for him, that precedent had been fabricated by the Generative AI service and did not exist.

  - Non-compliance with privacy and other applicable laws. There has been a pronounced trend toward expanding privacy rights, both in the U.S. and abroad. This trend is likely to continue, and, much as with confidential information, disclosing private information to a Generative AI service may be deemed improper under those laws. This is particularly true if the target operates in an industry where sensitive information receives heightened legal protection (for example, health care, accounting, and insurance). If the target’s use of Generative AI results in an improper disclosure, the target may be in violation of these privacy laws and face substantial consequences.

  - Contractual rights. The target’s use of Generative AI needs to be evaluated to determine whether it violates the terms of the target’s commercial contracts, such as confidentiality provisions, since any such violation creates legal risk for breach of those agreements.

  - Business risk of non-use. As the marketplace increasingly adopts Generative AI, a target’s failure to do so can put the business at a competitive disadvantage, which may affect the purchase price or the target’s desirability as an acquisition.

There may be other business and legal risks involved, and those should be identified and considered as part of a buyer’s due diligence.

- Create an Inventory of the Target’s Use of Generative AI. Once a buyer understands the potential risks, it should create an inventory of the target’s use of Generative AI. This inventory should include, at a minimum:

  - The Generative AI services being used;

  - The manner in which those services are being used; and

  - The categories of information disclosed through the use of Generative AI.

A common hurdle to proper diligence is reluctance by the target’s management to provide this type of detailed operational information. A thorough analysis obviously requires the deepest level of inquiry. In practice, however, the target’s reluctance to respond, combined with the buyer’s push to close, often forces the deal to close on imperfect information. The resulting information gaps mean additional risk for the buyer. To manage this risk, the buyer should consider the target’s industry and the ways in which the target is likely to use Generative AI when determining the scope of its inquiry.

- Review the Target’s Employee Policy Governing Generative AI Use. While businesses and lawyers are still grappling with how to respond to this changing environment, a common response has been to adopt an employee policy governing the use of Generative AI. Reviewing the target’s Generative AI policy can give a buyer an early level of comfort, as a well-crafted, bespoke policy demonstrates a concerted effort by the target’s management to manage this risk. Conversely, the absence of a policy could indicate that management has given no thought to managing the risks associated with Generative AI.

The target’s policy should be reviewed to verify that it requires Generative AI to be used in compliance with applicable law and in a manner appropriate for the target’s industry. As with any other employment policy, though, adopting the policy is only half the battle. To properly assess risk, a buyer will also need to compare the policy with the company’s actual practice. With a proper inventory in hand, this practical risk can be assessed quickly.

- Review the Terms of Service for the Generative AI Services Used. Each Generative AI service has its own terms of service, and it is imperative that the target is following them. If it is not, the target’s continued use of the service could be in jeopardy, and ownership of any content generated by the service, to the extent any ownership rights exist, may not transfer to the target.

- Review the Laws and Agreements Governing Information Disclosed to Generative AI Services. A buyer needs to know what rules govern the target’s use of Generative AI to understand the related risk. Those rules can be created by law, regulation, or agreement. This is obvious to experienced buyers in general, but less so as it relates to Generative AI. Buyers should look for privacy rules specific to the target’s industry that may be affected, as well as generally applicable privacy rules. Private agreements should also be reviewed. While this review would certainly be part of a buyer’s general commercial contract diligence, the Generative AI component can easily be overlooked without special care, particularly if the reviewer does not have the benefit of the Generative AI inventory referenced above.

While these steps provide general guidance for performing due diligence on an acquisition target’s use of Generative AI, additional care and further steps may be necessary, particularly for targets operating in regulated industries.

If you have any questions about this alert, please reach out to David A. Johnson, Jr. or the Aronberg Goldgehn attorney with whom you normally work.

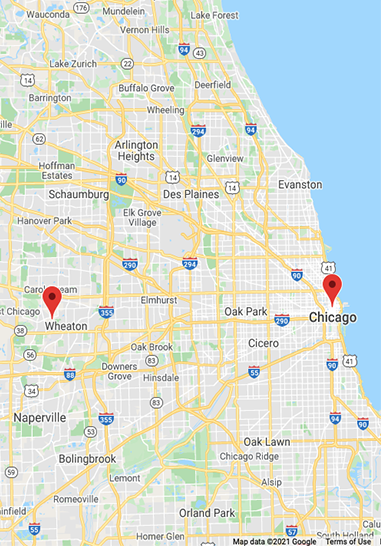

225 W. Washington St., Suite 2800, Chicago, Illinois 60606

301 S. County Farm Road, Suite A, Wheaton, Illinois 60187